Why NVIDIA GPUs are the Ultimate Choice for Deep Learning and Generative AI

The global shift towards Artificial Intelligence (AI), Deep Learning (DL), and Generative AI (GenAI) has made the Graphics Processing Unit (GPU) one of the most critical components in modern AI computing infrastructure. Specifically, NVIDIA GPUs have cemented their position as the industry standard, not just due to raw computational power, but thanks to their comprehensive hardware and software ecosystem.

Choosing the right NVIDIA GPU is critical for maximizing efficiency, accelerating model training, and controlling costs. This comprehensive guide breaks down the essential NVIDIA GPU features and the top models for your AI ambitions.

Core NVIDIA Advantages for AI Workloads

NVIDIA’s dominance in AI is rooted in proprietary technology that optimizes the parallel computations essential for neural networks:

- Tensor Cores: These specialized processing units are the heart of NVIDIA’s AI performance. They are designed to efficiently handle the large-scale matrix multiplications that define deep learning, dramatically accelerating training and inference times for models like Transformers and Large Language Models (LLMs).

- CUDA Platform: The Compute Unified Device Architecture (CUDA) is NVIDIA’s parallel computing platform and programming model. It’s the essential software layer that allows developers to leverage the GPU’s power using familiar frameworks like PyTorch and TensorFlow. CUDA’s maturity and widespread adoption make it an industry standard.

- Massive VRAM (Video RAM): AI models, particularly LLMs and diffusion models for GenAI, require large amounts of GPU memory to hold model parameters, activations, and the batches of data being processed. NVIDIA’s data center GPUs offer High Bandwidth Memory (HBM2e/HBM3), while its workstation and consumer cards offer high-capacity GDDR6 or GDDR6X, which reduces the risk that memory capacity or bandwidth becomes the bottleneck.

- NVLink: For high-end server deployments, NVLink provides a high-speed, direct interconnect between multiple GPUs, enabling massive scaling for training foundational models across many processors without CPU bottlenecks.
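To make the VRAM point concrete, here is a minimal back-of-the-envelope sketch in Python. It estimates the memory needed for model weights from the parameter count and numeric precision. The function names and the 20% overhead factor are illustrative assumptions, not NVIDIA figures, and real usage varies with batch size, context length, and framework.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "fp8": 1, "int8": 1}


def weights_gb(num_params: float, precision: str = "fp16") -> float:
    """Memory for the model weights alone, in GB (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def inference_gb(num_params: float, precision: str = "fp16",
                 overhead: float = 0.2) -> float:
    """Weights plus an assumed overhead fraction for activations and caches.

    The default overhead of 0.2 is a placeholder assumption, not a measured value.
    """
    return weights_gb(num_params, precision) * (1 + overhead)


if __name__ == "__main__":
    for name, params in [("7B", 7e9), ("70B", 70e9)]:
        print(f"{name}: {weights_gb(params):.0f} GB of fp16 weights, "
              f"roughly {inference_gb(params):.0f} GB with assumed overhead")
```

Under these assumptions, a 7-billion-parameter model needs about 14 GB for FP16 weights, while a 70-billion-parameter model needs about 140 GB, which is more than a single 80GB card can hold. Training typically needs considerably more memory than inference because gradients and optimizer state are stored as well.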

Top-Tier NVIDIA GPUs for AI: A Tiered Approach

The “best” GPU depends entirely on the scale, budget, and mission of your AI project. NVIDIA offers tailored solutions across three main tiers:

1. The Enterprise & Research Champions (Uncompromised Performance)

These GPUs are designed for data centers, large-scale training, and global inference deployment.

| GPU Model | Ideal Workload | Key Features |
| --- | --- | --- |
| NVIDIA H100 (Hopper) | Training Foundational LLMs, Exascale HPC, AI Supercomputing. | Transformer Engine for unprecedented LLM acceleration, HBM3 Memory, massive FP8/FP16 performance, 4th Gen Tensor Cores. |
| NVIDIA A100 (Ampere) | Large-scale AI training, Versatile cloud & data center use, Multi-Instance GPU (MIG) support. | High HBM2e VRAM (up to 80GB), powerful 3rd Gen Tensor Cores, excellent for complex, large datasets. |
| NVIDIA RTX 6000 Ada | Professional AI workstations, High-fidelity GenAI/Rendering, Complex scientific visualization. | Up to 48GB GDDR6 ECC Memory, high core count, blower-style cooling ideal for dense, multi-GPU workstations. |

2. The Power Workstation & Prototyping Heroes (Performance/Price Balance)

These models offer fantastic value and performance for individual researchers, startups, and mid-sized team projects.

- NVIDIA RTX 4090: While a consumer card, its 24GB of GDDR6X VRAM and latest 4th-Gen Tensor Cores make it arguably the best performance-per-dollar GPU for small to medium-scale AI model training and rapid prototyping of GenAI applications.

- NVIDIA RTX A5000 / A6000 (Previous Gen Professional): These remain solid choices, offering large VRAM pools (up to 48GB) and the reliability of a professional-grade card, often at a lower entry price than the latest Ada models.

3. The Entry-Level & Enthusiast Options (Budget & Learning)

- NVIDIA RTX 4070 / 4080: Great for students, beginners, or running smaller local LLMs and image generation tasks. Their accessible price point and adequate VRAM (12GB+) make them strong starting points.
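As a rough illustration of how the tiers map to memory requirements, the sketch below shortlists GPUs by VRAM capacity. The `shortlist` helper and the lookup table are hypothetical. The A100, RTX 6000 Ada, RTX A6000, RTX 4090, and RTX 4070 capacities come from the text above; the H100 (80GB), RTX A5000 (24GB), and RTX 4080 (16GB) values are assumptions based on commonly listed specifications, so check the datasheet for the exact variant you are buying.

```python
# VRAM capacity in GB per model. Entries marked "assumed" are not stated in
# this article and should be checked against the vendor datasheet.
GPU_VRAM_GB = {
    "H100": 80,          # assumed
    "A100": 80,
    "RTX 6000 Ada": 48,
    "RTX A6000": 48,
    "RTX 4090": 24,
    "RTX A5000": 24,     # assumed
    "RTX 4080": 16,      # assumed
    "RTX 4070": 12,
}


def shortlist(required_gb: float) -> list:
    """Return the GPUs with at least `required_gb` of VRAM, smallest first."""
    fits = [(vram, name) for name, vram in GPU_VRAM_GB.items()
            if vram >= required_gb]
    # Sorting the (vram, name) pairs orders by capacity, then alphabetically.
    return [name for _, name in sorted(fits)]


if __name__ == "__main__":
    print(shortlist(20))   # cards that can hold a ~20 GB working set
    print(shortlist(100))  # empty list: no single card here has 100 GB
```

VRAM is only one selection criterion. Budget, memory bandwidth, interconnect, cooling, and vendor support also matter, so treat the result as a first filter rather than a recommendation.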

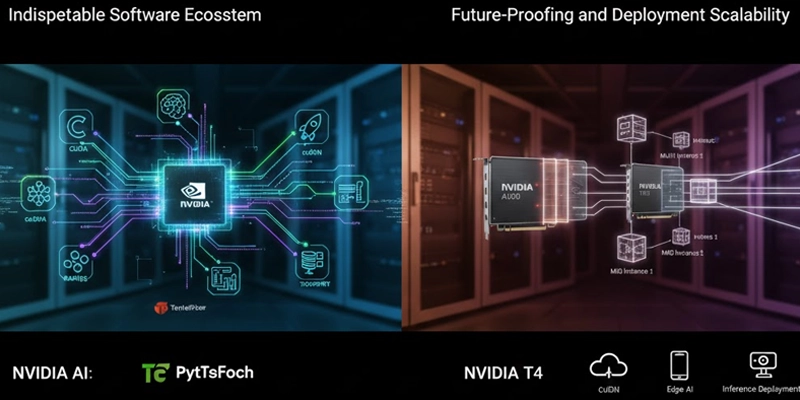

The Indispensable Software Ecosystem

While hardware specifications are essential, the true power multiplier for NVIDIA GPUs in AI lies in the robust and continuously evolving software stack. The CUDA platform is just the foundation; NVIDIA provides an array of tools and libraries that dramatically accelerate the AI development lifecycle.

The NVIDIA AI Enterprise suite is a key differentiator, offering validated, supported, and secure software tools specifically optimized for modern data science pipelines. The wider stack includes essential libraries like cuDNN (for deep neural networks), TensorRT (for high-performance inference optimization), and RAPIDS, which accelerates end-to-end data science workloads through GPU libraries such as cuDF and cuML that mirror the familiar Pandas and Scikit-learn APIs. Keeping data on the GPU reduces CPU-to-GPU transfer bottlenecks and can substantially speed up data preparation and feature engineering, steps that are often cited as consuming the majority of an AI project’s timeline.

Furthermore, NVIDIA actively collaborates with major cloud providers and open-source communities, ensuring that new models, frameworks, and deployment methods are optimized for their hardware from day one. This deep integration minimizes compatibility issues and ensures that your investment remains future-proof against the rapidly changing landscape of AI research.

Future-Proofing and Deployment Scalability

The choice of GPU is a long-term investment. Deploying NVIDIA hardware ensures maximum scalability—a fundamental requirement as your AI models transition from development to production. Features like the Multi-Instance GPU (MIG) capability on the A100 and newer architectures allow a single physical GPU to be partitioned into up to seven independent instances. This significantly improves utilization and reduces costs in cloud and multi-tenant environments by letting different teams or processes securely share the same hardware.
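The utilization benefit of MIG can be sketched with simple arithmetic. The example below compares how many physical GPUs a set of small workloads needs with and without partitioning. It assumes every workload fits inside the smallest MIG instance, and the `gpus_needed` helper is hypothetical. Real MIG profiles come in fixed sizes with placement rules, so actual packing may be less efficient.

```python
import math

# "Up to seven independent instances" per physical GPU, as stated above.
MAX_MIG_INSTANCES = 7


def gpus_needed(num_workloads: int, use_mig: bool) -> int:
    """Physical GPUs required when each workload gets its own GPU or MIG slice.

    Assumes every workload is small enough for the smallest MIG instance.
    """
    if num_workloads < 0:
        raise ValueError("num_workloads must be non-negative")
    per_gpu = MAX_MIG_INSTANCES if use_mig else 1
    return math.ceil(num_workloads / per_gpu)


if __name__ == "__main__":
    workloads = 20
    print("Without MIG:", gpus_needed(workloads, use_mig=False))
    print("With MIG:   ", gpus_needed(workloads, use_mig=True))
```

For 20 small workloads, dedicating one GPU to each requires 20 cards, while seven-way partitioning brings that down to 3 under the stated assumption.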

For inference deployment, where speed and cost efficiency are paramount, NVIDIA’s inference-focused data center GPUs (like the Turing-based T4 or the Ada-based L4) offer specialized, low-power solutions tailored for real-time applications. These inference accelerators balance high throughput with low latency, making them well suited to edge computing and cloud services where immediate responses are mandatory. By committing to the NVIDIA ecosystem, companies secure a validated path from rapid prototyping on an RTX card to large-scale, cost-optimized deployment on inference GPUs such as the T4 and L4 or on flagship H100 hardware, streamlining the entire MLOps workflow.

Global Access: Your NVIDIA Partner in Dubai

Acquiring specialized, high-demand AI hardware like the NVIDIA H100 or RTX 6000 Ada can be complex, especially with global supply chain dynamics.

Our company (bahatec) specializes in sourcing and delivering the full range of NVIDIA GPUs—from the enterprise-grade H100 and A100 to the professional RTX A-series and the high-performance consumer RTX 4090—directly to your location.

We guarantee competitive pricing and reliable delivery for your AI infrastructure needs anywhere in the city of Dubai, UAE. Let us handle the logistics so you can focus on training your next breakthrough model.

Contact us today to discuss your AI hardware requirements and secure the best NVIDIA GPUs in Dubai.